Select the version of your OS from the tabs below. If you don't know which version you are using, run `cat /etc/os-release` or `cat /etc/issue` on the board.

In the AI at the Edge Demo, Toradex uses an object detection algorithm to differentiate between several kinds of pasta and to show the module's computer vision capabilities.

Amazon SageMaker Neo enables developers to train machine learning models once and run them anywhere in the cloud and at the edge. This article outlines the process of training a new custom object detection (SSD) MXNet model and cross-compiling it with SageMaker Neo for i.MX8 processors. By the end, you will be able to generate a model file, trained with your own images, that runs on the i.MX8.

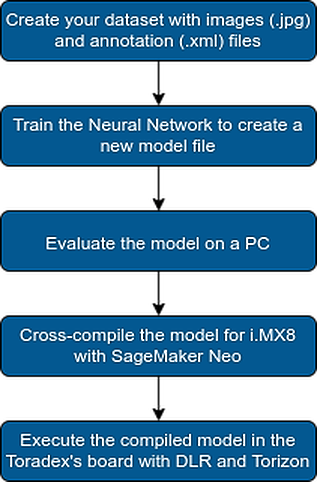

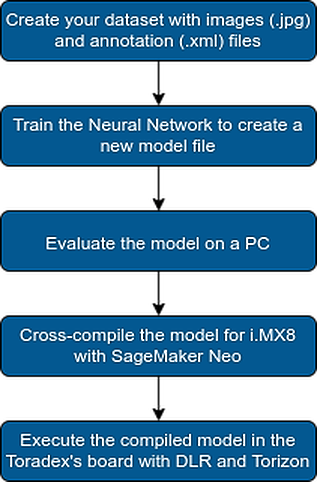

The training process consists of several macro-steps, illustrated in the picture below. This article provides an overview of each macro-step. Click on each of them to go to the corresponding section.

LabelImg is a good tool for annotating JPG images and generating the corresponding JSON files.

Collect as many images as you can, in different lighting conditions and positions.

For the AI at the Edge, Pasta Detection Demo with AWS, we tagged 3,267 pictures in 5 classes (Penne, Elbow, Farfalle, Shell and Tortellini). Toradex provides the demo's dataset as an example.

Yes, even with tools, tagging images is a rather tedious process.
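Once the images are tagged, it is common to hold back part of them for validation during training. The sketch below is one possible way to do that and is not part of the original demo; the function name `split_dataset`, the 10% validation share and the folder name in the usage comment are assumptions made for illustration.

```python
import random


def split_dataset(image_names, val_fraction=0.1, seed=0):
    """Shuffle image names reproducibly and split them into (train, val) lists."""
    names = sorted(image_names)         # sort first so the result does not depend on input order
    random.Random(seed).shuffle(names)  # a private RNG leaves the global random state untouched
    n_val = int(len(names) * val_fraction)
    return names[n_val:], names[:n_val]


# Usage sketch (hypothetical folder name):
#   from pathlib import Path
#   images = [p.name for p in Path("dataset/images").glob("*.jpg")]
#   train, val = split_dataset(images)
```

Using a fixed seed means the same images end up in the validation set on every run, which makes results from different training runs comparable.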

The next step is to train an SSD model with our custom dataset. In this article, we use the MXNet framework to generate the neural network model in a format that can be exported to other tools. GluonCV provides a good implementation of SSD for MXNet.

The first step is to get familiar with GluonCV. We recommend running the [SSD demo on the developer tutorial page](https://gluon-cv.mxnet.io/build/examples_detection/demo_ssd.html) to see how the algorithm works.

After getting familiar with GluonCV, you can run the training to generate a new model file trained with your custom dataset. GluonCV provides a good single-file Python script for training: https://gluon-cv.mxnet.io/build/examples_detection/train_ssd_voc.html

Download the training script from the GluonCV website and put train_ssd.py in the same folder as your dataset. To start the training, execute:

$ python3 train_ssd.py --epochs 60 --num-workers 8 --batch-size 4 --network mobilenet1.0 --data-shape 512

This process takes a long time (expect the training to run for several hours). After it finishes, go to the model folder to obtain the .params and .json files corresponding to your model.
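A small helper can list what the training left behind. This is a minimal sketch using only the Python standard library; the function name `find_model_files` and the folder name `model` in the usage comment are assumptions, so adjust them to wherever your training run saved its output.

```python
from pathlib import Path


def find_model_files(model_dir):
    """Return (params_files, json_files) found directly in model_dir, sorted by name."""
    folder = Path(model_dir)
    params = sorted(p.name for p in folder.glob("*.params"))
    symbols = sorted(p.name for p in folder.glob("*.json"))
    return params, symbols


# Usage sketch (hypothetical folder name):
#   params, symbols = find_model_files("model")
#   print(params, symbols)
```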

For more information about the training process with GluonCV, visit the [developer's tutorial page](https://gluon-cv.mxnet.io/tutorials/index.html).

Go back to the SSD demo for MXNet and modify it to load the .params and .json files of the model you just trained. After some evaluation, when you think your model is accurate enough, move on to the next step and cross-compile it for i.MX8 using SageMaker Neo.
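One way to load a .json/.params pair in MXNet is `SymbolBlock.imports`, sketched below. The sketch assumes the files follow MXNet's export naming (`<prefix>-symbol.json` and `<prefix>-<epoch>.params`) and that the network input is called `data`; both are assumptions about your files, and the prefix in the usage comment is hypothetical.

```python
def exported_model_paths(prefix, epoch=0):
    """Build the file names MXNet uses for an exported model."""
    return f"{prefix}-symbol.json", f"{prefix}-{epoch:04d}.params"


def load_exported_model(prefix, epoch=0):
    """Load the exported network on the CPU. Requires the mxnet package."""
    import mxnet as mx  # imported lazily so the helper above works without MXNet installed

    symbol_file, params_file = exported_model_paths(prefix, epoch)
    # "data" is assumed to be the input name of the exported network.
    return mx.gluon.SymbolBlock.imports(symbol_file, ["data"], params_file, ctx=mx.cpu())


# Usage sketch (hypothetical prefix):
#   net = load_exported_model("model/ssd_pasta")
```

The returned network can then take the place of the pretrained model in the demo script, provided the preprocessing matches what was used during training.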

The next step is to cross-compile the model so that it can run on an ARM CPU. This is where SageMaker Neo comes in.

You can compile a model using the AWS CLI tools or the AWS console. To keep the tutorial simple, we show how to do it in the console.

Open the SageMaker Neo console. Select Inference > Compilation jobs:

Create a compilation job, indicating the location of the .params and .json files in an S3 bucket. We define the input size of our network as a 224x224 RGB frame. Select nxp_i.MX8QM as the target device.
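To our understanding of the SageMaker Neo documentation, the model artifacts are expected in S3 as a single gzip-compressed tar archive; treat that as an assumption and check the current documentation. Under that assumption, the two files could be bundled as sketched below. The function name `package_model`, the archive name and the file names in the usage comment are hypothetical.

```python
import tarfile
from pathlib import Path


def package_model(params_file, symbol_file, archive="model.tar.gz"):
    """Bundle the .params and .json files into one gzip-compressed tar archive."""
    with tarfile.open(archive, "w:gz") as tar:
        for path in (params_file, symbol_file):
            # arcname drops the directory part so both files sit at the archive root
            tar.add(path, arcname=Path(path).name)
    return archive


# Usage sketch (hypothetical file names):
#   package_model("model/ssd_pasta-0000.params", "model/ssd_pasta-symbol.json")
```

The resulting archive is what you would upload to the S3 bucket referenced by the compilation job.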

Click on the Create button. When the job finishes, the compiled model will be available in your S3 folder.
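For readers who prefer scripting over the console, the same job can be described with boto3's `create_compilation_job`. This is a sketch under stated assumptions, not the procedure used for the demo: the target device identifier `imx8qm`, the input name `data`, the batch dimension of 1 and the 900-second runtime limit are assumptions, and the job name, role ARN and S3 URIs in the usage comment are placeholders.

```python
import json


def build_compilation_request(job_name, role_arn, model_s3_uri, output_s3_uri,
                              input_shape=(1, 3, 224, 224), target_device="imx8qm"):
    """Assemble the keyword arguments for SageMaker's create_compilation_job call."""
    return {
        "CompilationJobName": job_name,
        "RoleArn": role_arn,
        "InputConfig": {
            "S3Uri": model_s3_uri,
            # Input name mapped to its shape: batch, channels, height, width
            "DataInputConfig": json.dumps({"data": list(input_shape)}),
            "Framework": "MXNET",
        },
        "OutputConfig": {
            "S3OutputLocation": output_s3_uri,
            "TargetDevice": target_device,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 900},
    }


def start_compilation_job(request):
    """Submit the job. Requires boto3 and configured AWS credentials."""
    import boto3  # imported lazily so the request can be built without boto3 installed

    return boto3.client("sagemaker").create_compilation_job(**request)


# Usage sketch (all values are placeholders):
#   request = build_compilation_request(
#       "pasta-ssd-imx8", "arn:aws:iam::<account-id>:role/<role-name>",
#       "s3://<bucket>/model.tar.gz", "s3://<bucket>/compiled/")
#   start_compilation_job(request)
```

Keeping the request in a plain dictionary makes it easy to review the settings before anything is sent to AWS.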

Refer to the SageMaker Neo documentation for more information about console usage.

Congratulations! You just compiled and tuned your model for i.MX8! To run the compiled model on an Apalis board, refer to the article Executing models tuned by SageMaker Neo in Torizon using Docker.
